AI in Cybersecurity: How Large Language Models Are Changing the Threat Landscape

Since late 2022, we’ve seen a dramatic rise in AI based on Large Language Models (LLMs), in the form of ChatGPT (GPT stands for Generative Pre-trained Transformer) and its cousins. A great deal has been written about how these tools will affect cyber security.

In Hornetsecurity’s 2024 survey, a staggering 45% of business leaders voiced concerns about AI exacerbating the threat landscape. This alarming trend mirrors the global rise of AI-driven malicious activities, with threat actors leveraging automation and sophistication to orchestrate attacks.

The UK’s National Cyber Security Centre (NCSC) has also noted a troubling consequence: AI is democratizing cybercrime, enabling even novice criminals to engage in sophisticated attacks previously reserved for seasoned adversaries.

It is difficult to determine with any certainty whether a malicious email was created or enhanced by an LLM, primarily because a good one is indistinguishable from a well-crafted, hand-written phishing email.

However, these are the areas where we know that LLMs are having an impact on cyber security:

Code quality: GitHub Copilot and similar tools are showing quite astonishing improvements in developer productivity, for beginners and seasoned hands alike. While there are safeguards in place to stop these tools from developing obvious malware, they can be circumvented, so it’s very likely that malware developers are using them to crank out more malicious code faster.

Sophisticated phishing: LLMs can draft and enhance phishing emails, especially spear-phishing emails. We have an example of one of these below, and it’s probable that criminals are using these tools to fine-tune their wording to achieve maximum results. Again, various LLMs have safeguards in place to stop these sorts of malicious uses, but they can often be bypassed. There are also GPT tools that lack these safeguards, such as WormGPT and others. Hornetsecurity’s 2024 survey revealed that 3 in 5 businesses describe AI-enhanced phishing attacks as their top concern.

Translating attacks into other languages: Many phishing and Business Email Compromise (BEC) defenses are tuned for English and have less success stopping attacks in other languages. There are also geographies where phishing and BEC attacks have been uncommon up until now, making the average finance department worker less suspicious (Japan, other countries in East Asia, and Latin America come to mind). Here, we’re likely to see a surge in attacks, because attackers who aren’t fluent in a language can now translate emails into near-perfect prose, expanding their potential target pool manyfold.

Targeted research: Pulling off a successful spear-phishing attack, or a social engineering phone call to helpdesk staff, requires a detailed understanding of the company, the individuals being impersonated, and their relationship to others in the hierarchy. Traditionally this research has been done through LinkedIn, company websites, and the like, but with the advent of LLM-based search engines, this is changing. As you’ll see in our example below, AIs can help immensely with this task and shorten the time investment required.

To demonstrate how easy it is to generate a phishing email through an LLM we decided to create our own. The following is an attack on Andy Syrewicze, a Technical Evangelist here at Hornetsecurity. Here is the initial research prompt and output:

As you can see, a simple prompt provides a detailed breakdown of a social engineering strategy to target Andy drawing on his professional and personal online footprint. Something that would take far longer to achieve manually.

This is then followed up with a very convincing draft of a spear-phishing email for Andy.

The email generated here is of a much higher quality than the average phishing email and far more likely to succeed. The personalization of the references and context demonstrates how effective AI tools such as LLMs can be in crafting targeted spear-phishing attacks.

Why We Fall for Scams

A thorough investigation of social engineering and of hacking human psychology is a topic for an entire book on its own. Here we’ll just focus on the highlights, to give an understanding of the basic characteristics that make us so susceptible.

A well-crafted phishing email has the following characteristics:

- It’ll blend in and be part of the normal communication flow. We’re used to receiving emails about a parcel delivery, or a notification from our bank, or a reminder from our boss, so a fake email with the same characteristics is less likely to raise our suspicions. It has the right logos, structure, format, and it looks like the expected sender so we’re more likely to take the requested action.

- It’ll appeal to our emotions. The most important part of any social engineering endeavor is to bypass the cold, logical thinking part of our mind (the cerebrum) and activate the emotions and the “fight or flight” center (the amygdala) so that we take actions we wouldn’t normally contemplate. Some approaches appeal to greed or reward (“click here for free tickets”), some to shame or embarrassment (“I’ve got video recordings of what you did last night”), and some to fear or dread (“I need you to transfer this amount now or you’ll be fired”). The most common appeal is urgency: when something needs to be done “right now”, we tend to skip past our normal, suspicious questions and just get it done, often to stop feeling those uncomfortable emotions as quickly as possible.

- It’ll have a requested action that’s not too unusual. Examples include providing personal details to your “bank” (something we remember having to do when opening an account at a new bank), or resetting our network password by clicking a link and being presented with a normal-looking sign-in page.

The whole effect of an effective phishing lure is short-circuiting our questioning rational mind by invoking emotions and urgency and providing an easy way to “fix the issue” quickly.
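As a rough illustration for awareness training, the emotional appeals listed above can be sketched as a simple phrase check. This is a minimal sketch, not a real phishing filter: the `CUES` phrase lists and the `flag_lure_cues` function are hypothetical names invented for this example, loosely based on the sample lures quoted above, and a well-written AI-generated email could easily avoid every phrase in them.

```python
import re

# Illustrative cue phrases only (assumptions for this sketch, loosely based
# on the example lures quoted in the article). Not a production rule set.
CUES = {
    "urgency": ["right now", "immediately", "urgent", "as soon as possible"],
    "reward": ["free tickets", "you have won", "gift card"],
    "fear": ["you'll be fired", "account will be suspended", "final notice"],
    "shame": ["video recordings", "what you did"],
}


def flag_lure_cues(text: str) -> dict[str, list[str]]:
    """Return the cue phrases found in `text`, grouped by emotional category."""
    # Lowercase, straighten curly apostrophes, and collapse whitespace so that
    # simple substring matching works on typical email text.
    normalized = re.sub(r"\s+", " ", text.lower()).replace("\u2019", "'")
    found: dict[str, list[str]] = {}
    for category, phrases in CUES.items():
        hits = [phrase for phrase in phrases if phrase in normalized]
        if hits:
            found[category] = hits
    return found


if __name__ == "__main__":
    sample = "I need you to transfer this amount right now or you'll be fired"
    print(flag_lure_cues(sample))
```

A check like this is best used as a teaching aid, showing staff which emotional lever a message is pulling, rather than as a way of deciding whether a message is safe.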

This leads us neatly to the next step – the importance of security awareness training for all your users.

User Training is Crucial

This cannot be overstated: you cannot build a cyber-resilient organization without involving every single person who works there. This starts with the basic awareness to ask an unknown person in the office who isn’t wearing a badge to identify themselves, and to call security if the answer doesn’t stack up.

When someone calls you claiming to be from the IT helpdesk and asks you to approve the MFA prompt you’re about to receive on your phone, don’t assume they’re telling the truth. Always double-check their credentials first to ensure that it’s a legitimate request.

What you’re trying to foster is “polite paranoia”: making it normal to question unusual requests, to understand the risk landscape, and to sharpen instincts. Most people who work in businesses aren’t cyber or IT savvy and weren’t hired for those skills. However, everyone needs to have a basic understanding of how identity theft works in our modern digital world, both in their personal and professional lives.

They also need to have a grasp of the business risks introduced by digital processes, including emails.

By having this context they’ll be able to understand when things are out of context or unusual and have enough suspicion to ask a question or two before clicking the link, wiring the funds, or approving the MFA prompt.

And this isn’t a once-off tick on a form to achieve compliance with a regulation.

Often, the long, tedious, and mandatory presentations that organizations conduct once a year or quarterly, followed by multiple-choice quizzes, are perceived as time-wasters by the staff. They want to rush through them quickly and typically forget any insights gained.

Instead, the training program should be designed to be ongoing, consisting of bite-sized, interesting, immediately applicable, and fun training modules combined with simulated phishing attacks to test users. If any user clicks on a phishing email, they should be given additional training.

Over time, the system should automatically identify users who rarely fall for such attacks and interrupt them with training only infrequently, while persistent offenders are given additional training and simulations on a regular basis.
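The adaptive cadence described above can be sketched in a few lines. This is a minimal sketch under stated assumptions: `UserRecord`, `click_rate`, `next_simulation_in_days`, the ten-result window, and the 30% / 10% thresholds are all hypothetical values chosen for illustration, not how any particular product (including the Employee Security Index discussed later) actually calculates risk.

```python
from dataclasses import dataclass, field


@dataclass
class UserRecord:
    name: str
    # One entry per simulated phishing email; True means the user clicked it.
    results: list[bool] = field(default_factory=list)


def click_rate(user: UserRecord, window: int = 10) -> float:
    """Fraction of the user's most recent simulations that they clicked."""
    recent = user.results[-window:]
    if not recent:
        # No history yet: treat as highest risk so new users are tested soon.
        return 1.0
    return sum(recent) / len(recent)


def next_simulation_in_days(user: UserRecord) -> int:
    """Pick a testing interval: frequent for repeat clickers, rare for careful users.

    The thresholds and intervals are illustrative assumptions only.
    """
    rate = click_rate(user)
    if rate >= 0.3:
        return 7   # persistent offender: weekly simulation plus extra training
    if rate >= 0.1:
        return 30  # occasional clicker: monthly
    return 90      # sharp instincts: quarterly, to avoid needless interruption


if __name__ == "__main__":
    careful = UserRecord("careful", [False] * 10)
    risky = UserRecord("risky", [True, False, True, False])
    for user in (careful, risky):
        print(user.name, next_simulation_in_days(user))
```

Using only a recent window of results means a user who improves is moved to a lighter schedule over time, which matches the goal of interrupting careful staff as little as possible.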

The other reason for ongoing training is that the risk landscape is continuously changing. Not long ago, malicious emails containing QR (Quick Response) codes to scan were the exception. Now they’re a very familiar sight, so staff need ongoing reminders not to scan them with their phones (outside of established business processes).

Security experts often lament the priorities of staff, saying, “if they only took a second to read the email properly, they’d spot the signs that it’s phishing”, or “they just don’t take security seriously”.

This is a fundamental misunderstanding of the priorities and psychology of the average office worker: clicking a link in an email will at most get you a slap on the wrist, whereas not fulfilling an urgent request from the boss can get you in serious trouble or even fired.

And this is why the entire leadership, from middle managers all the way to the C-suite, must lead by example. If they follow secure processes themselves and communicate that they understand the basics, staff will follow suit.

But if the CFO requests an exemption from MFA or regularly bypasses security controls because “it’s more efficient”, there’s no chance that their staff will take cyber security seriously.

A Day in the Life at Cyber Resilient Inc.

What does it look like at an organization that has embraced this approach? First of all, no one fears speaking up or asking “silly questions” about weird emails or strange phone calls. If there is an incident and someone clicks something they shouldn’t have, there’s no blame and there are no accusations. It’s not personal; it’s treated as the failure of a process.

This brings a strong sense of psychological safety, an important foundation for cyber resiliency.

Transparency is promoted from the leadership all the way throughout the organization. Understanding that we’re all human, we’re “all in this together” and being upfront about making mistakes, without fear of retribution, will improve the cyber resiliency culture.

Talking about new cyber risks and exploring not just business risks but also the risks in people’s personal lives is another strong result of a good security culture.

Our working and personal lives are blended like never before, with people sending and receiving emails from their personal devices, sometimes even working from their personal laptops (BYOD), which means that the risks to the business aren’t confined to corporate assets and networks.

Compromises of users’ personal identities can be used by criminals to then pivot to compromise business identities and systems.

Consider the mirror image: in an organization where cyber resiliency isn’t valued, staff will be fearful of making mistakes and unsure what processes to follow if they think they might have made one. Individuals are blamed when incidents do occur, which ensures that future issues are swept under the rug by staff hoping to avoid the same fate.

And staff don’t understand IT, they don’t understand the risk landscape and they routinely put the organization at risk because of this lack of understanding.

Implementing Security Awareness Service

As mentioned, it’s important that security awareness training is incorporated into the work life of your users; it can’t be something that’s done once every six or twelve months. Hornetsecurity’s Security Awareness Service was designed with exactly this in mind, providing short training videos coupled with spear-phishing simulations.

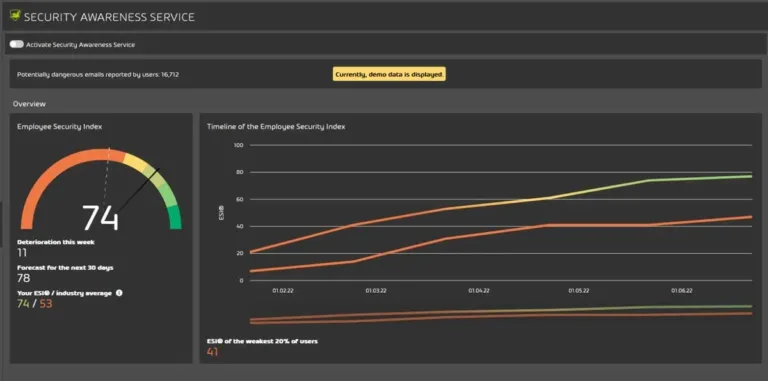

But overworked IT teams also don’t want to spend a lot of time scheduling training and simulations, so the service incorporates the Employee Security Index (ESI), which measures the likelihood that each user (and each group or department) will fall for targeted, simulated attacks.

This is mostly hands-off for the administrators, so the users who need extra training and tests receive it, whereas staff with already sharp instincts are tested less frequently. You can also track ESI over time and see the forecast for it.

There’s also a gamification aspect where users can compare themselves to others, which creates a strong incentive to be more cautious and sharpen instincts. The training material is available in multiple languages.

Another benefit of the Security Awareness Service is the statistics: they give security teams and business leaders the data to understand the current risk profile of their staff and to see where boosts of extra training might need to be deployed.

Enhance employee awareness and safeguard critical data by leveraging Hornetsecurity’s Security Awareness Service for comprehensive cyber threat education and protection.

We work continuously to give our customers confidence in their Spam & Malware Protection and Advanced Threat Protection strategies.

Conclusion

Everyone in business today is somewhat aware of the risks of cyber-attacks, phishing messages, and identity theft. It’s essential for businesses to recognize that cybersecurity threats are constantly evolving, especially in the age of AI.

Threat actors are leveraging AI tools to create sophisticated phishing attacks that can lead employees to click on malicious links or disclose sensitive information. The phishing samples we’ve shared should serve as a good source for communicating the signs of scam emails to your staff.

FAQ

LLMs, such as ChatGPT, have significantly altered the threat landscape by enabling automation and sophistication in malicious activities. They’ve democratized cybercrime, allowing even novice criminals to conduct sophisticated attacks. Specifically, LLMs are enhancing code quality, refining phishing emails, translating attacks into multiple languages, and facilitating targeted research for social engineering attacks.

Successful phishing emails blend seamlessly into normal communication flows, evoke emotions such as greed, shame, or fear, and prompt urgent actions. They mimic the appearance of legitimate messages, utilize familiar logos and formats, and contain requests that seem plausible, like providing personal details or clicking on links.

Organizations can enhance cyber resilience through comprehensive user training, which fosters a culture of “polite paranoia” and encourages questioning unusual requests. Continuous, engaging, and practical training modules combined with simulated phishing attacks help users recognize and respond to threats effectively. Leadership plays a crucial role in setting the tone for security awareness and adherence to secure processes throughout the organization.