The Foolproof Method of Maintaining Your Backup System

As you might expect, setting up backup is just the beginning. You will need to keep it running in perpetuity, and you cannot simply assume that everything will work. You need to keep constant vigilance over the backup system, its media, and everything that it protects.

Monitoring Your Backup System

Start with the easiest tools. Your backup program almost certainly has some sort of notification system. Configure it to send messages to multiple administrators. If it creates logs, use operating system or third-party monitoring software to track those as well. Where available, prefer programs that keep sending notifications until someone manually stops them or they detect that the problem has been resolved.

Set up a schedule to manually check on backup status. Partly, you want to verify that the notification system itself has not failed. Mostly, you want to search through job history for things that didn’t trigger the monitoring system. Check for minor warnings and correct what you can. Watch for jobs that fail frequently but succeed after a retry. These might serve as early indications of a more serious problem.
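As a rough illustration of that manual review, the sketch below counts how often each job succeeded only after a retry. The `JobRecord` shape, its field names, and the threshold of three occurrences are assumptions made for this example; a real backup program will expose its job history in its own format.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class JobRecord:
    """One entry from a backup job history (hypothetical shape)."""
    job_name: str
    status: str      # "success", "warning", or "failed"
    attempts: int    # 1 means the job worked on the first try


def find_flaky_jobs(history, min_occurrences=3):
    """Return names of jobs that repeatedly succeeded only after a retry."""
    retried = Counter(
        rec.job_name
        for rec in history
        if rec.status == "success" and rec.attempts > 1
    )
    return sorted(name for name, count in retried.items() if count >= min_occurrences)


# Sample history: "fileserver" needed a retry three times, "database" once.
history = [
    JobRecord("fileserver", "success", 2),
    JobRecord("fileserver", "success", 3),
    JobRecord("fileserver", "success", 2),
    JobRecord("database", "success", 1),
    JobRecord("database", "success", 2),
]
print(find_flaky_jobs(history))  # ['fileserver']
```

Jobs on this list never raised an alert, which is exactly why they deserve a closer look during the scheduled check.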

Testing Backup Media and Data

You cannot depend on even the most careful monitoring practices to keep your backups safe. Data at rest can become corrupted. Thieves, including insiders with malicious intent, can steal media. You must implement and follow procedures that verify your backup data. After all, a backup system is only valuable if the data can be restored when needed.

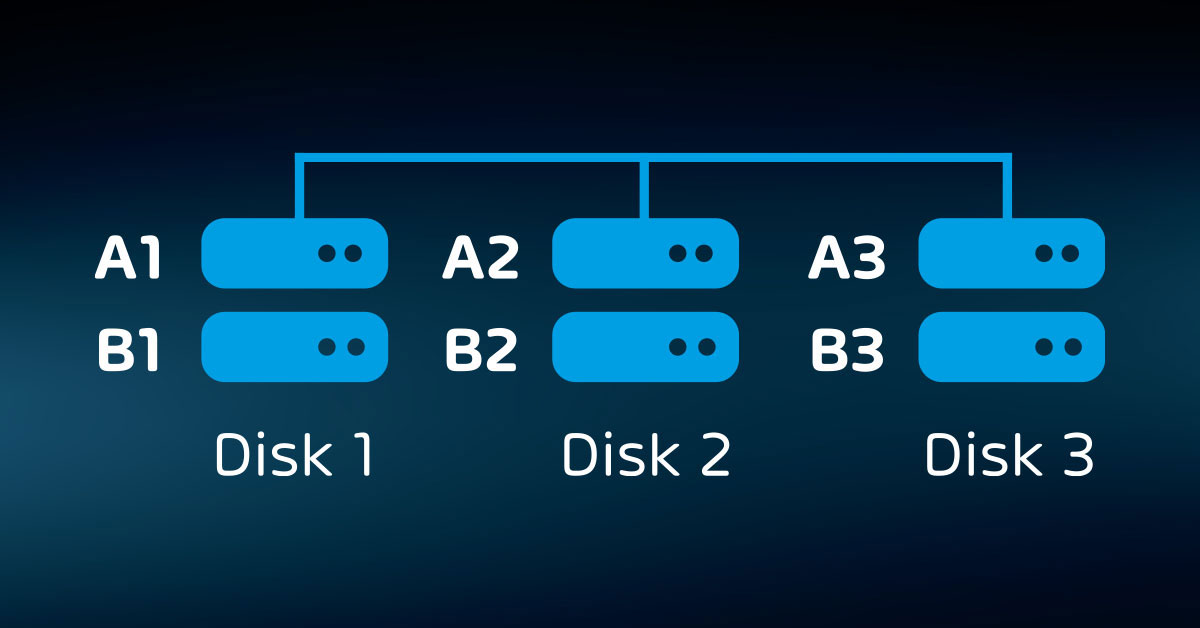

Keep an inventory of all media. Set a schedule to check on each piece. When you retire media due to age or failure, destroy it. Strong magnets work for tapes and spinning drives. Alternatively, drill a hole through mechanical disks to render them unreadable. Break optical media and SSDs any way that you like.
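A media inventory with a check schedule can start very simply. The sketch below assumes a hypothetical `Media` record and a 90-day check interval; both are illustrative choices, not recommendations from any particular vendor.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Media:
    """One tracked piece of backup media (hypothetical shape)."""
    label: str
    last_checked: date


def media_due_for_check(inventory, today, interval=timedelta(days=90)):
    """Return labels of media whose last check is at least `interval` old."""
    return [m.label for m in inventory if today - m.last_checked >= interval]


inventory = [
    Media("TAPE-001", date(2024, 1, 15)),
    Media("DISK-007", date(2024, 5, 1)),
]
print(media_due_for_check(inventory, today=date(2024, 6, 1)))  # ['TAPE-001']
```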

Organizations that do not track personal or financial information may not need to keep such meticulous track of media. However, anyone with backup data must periodically check that it has not lost integrity. The only way you can ever be certain that your data is good is to restore it.

Establish a regular schedule to try restoring from older media. If successful, make spot checks through the retrieved information to make sure that it contains what you expect.
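One way to make those spot checks repeatable is to compare restored files against checksums recorded at backup time. The following is a minimal sketch under that assumption: the `manifest` dictionary (relative path mapped to a SHA-256 digest) is a hypothetical format that you would have to produce yourself when the backup is taken.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large restores do not exhaust memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(restore_dir, manifest):
    """Return {relative_path: problem} for files that are missing or altered."""
    problems = {}
    root = Path(restore_dir)
    for relative_path, expected in manifest.items():
        candidate = root / relative_path
        if not candidate.is_file():
            problems[relative_path] = "missing"
        elif sha256_of(candidate) != expected:
            problems[relative_path] = "checksum mismatch"
    return problems


# Demo: one file restored correctly, one file absent from the restore.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "report.txt").write_bytes(b"quarterly numbers")
    manifest = {
        "report.txt": hashlib.sha256(b"quarterly numbers").hexdigest(),
        "ledger.csv": hashlib.sha256(b"never restored").hexdigest(),
    }
    result = verify_restore(tmp, manifest)
print(result)  # {'ledger.csv': 'missing'}
```

A checksum match shows only that the bytes survived. It does not replace opening a few restored files and confirming that they contain what you expect.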

Treat this article as a basic discussion of testing best practices. We will revisit testing in a dedicated post toward the end of this article series.

The activities in this article will take time to set up and perform. Do not allow fatigue to prevent you from following these items or tempt you into putting them off. You need to:

- Configure your backup system to send alerts on failed jobs, at a minimum;

- Assign someone the responsibility of regularly verifying, by hand, that the backup program is functioning;

- Configure a monitoring system to notify you if your backup software ceases running;

- Establish a regular schedule and accountability system to test that you can restore data from backup. Test a representative sampling of online and offline media.
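A backup program that has stopped running sends no failure alerts at all, so it helps to alert on the absence of success as well. The sketch below assumes you can obtain the time of each job's last successful run; the `last_success` mapping and the 26-hour threshold (a daily job plus some slack) are illustrative assumptions.

```python
from datetime import datetime, timedelta


def stale_jobs(last_success, now, max_age=timedelta(hours=26)):
    """Return names of jobs whose last successful run is older than `max_age`."""
    return sorted(
        name for name, finished in last_success.items() if now - finished > max_age
    )


last_success = {
    "fileserver": datetime(2024, 1, 10, 1, 0),  # ran last night
    "database": datetime(2024, 1, 8, 1, 0),     # silent for two days
}
now = datetime(2024, 1, 10, 8, 0)
print(stale_jobs(last_success, now))  # ['database']
```

Run a check like this from a system other than the backup server, so that a dead backup host cannot silence its own alarm.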

Too many organizations do not realize until they’ve lost everything that their backup media did not successfully preserve anything. Some have had backup systems sit in a failed state for months without discovering it. A few minutes of occasional checking can prevent such catastrophes.

Monitoring backup, especially testing restores, is admittedly tedious work. However, it is vital. Many organizations have suffered irreparable damage because they found out too late that no one knew how to restore data properly.

Maintaining Your Systems

The intuitive scope of a business continuity plan includes only its related software and equipment. When you consider that the primary goal of the plan is data protection, then it makes sense to think beyond backup programs and hardware. Furthermore, all the components of your backup belong to your larger technological environment, so you must maintain them accordingly.

Fortunately, you can automate common maintenance. Microsoft Windows will update itself over the Internet. The package managers on Linux distributions have the same ability. Windows also allows you to set up an update server on-premises to relay patches from Microsoft. Similarly, you can maintain internal repositories to keep your Linux systems and programs current.

In addition to the convenience that such in-house systems provide, you can also leverage them as a security measure. You can automatically update systems without allowing them to connect directly to the Internet.

In addition to software, keep your hardware in good working order. Of course, you cannot simply repair modern computer boards and chips. Instead, most manufacturers will offer a replacement warranty of some kind.

If you purchase fully assembled systems from a major systems vendor, such as Dell or Hewlett Packard Enterprise, they offer warranties that cover entire systems. They also have options for rapid delivery or in-person service by a qualified technician. If at all possible, do not allow out-of-warranty equipment to remain in service.

Putting It into Action

Most operating systems and software have automated or semi-automated updating procedures. Hardware typically requires manual intervention. It falls to the system administrators to keep both current.

- Where available, configure automated updating. Ensure that it does not coincide with backup, or that your backup system can successfully navigate operating system outages.

- Establish a routine for checking for firmware and driver updates. Vendors release these infrequently, so you can schedule each update as a one-off event.

- Monitor the Internet for known attacks against the systems that you own. Larger manufacturers have entries on Common Vulnerabilities and Exposures (CVE) lists. Sometimes they maintain their own lists, but you can also look entries up at https://cve.mitre.org/. Vendors usually release fixes in standard patches, but some will issue “hotfixes”. Those might require manual installation and other steps.

- If your hardware has a way to notify you of failure, configure it. If your monitoring system can check hardware, configure that as well. Establish a regular routine for visually verifying the health of all hardware components.
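To confirm that an automated update window does not coincide with a backup window, you can compare the two as half-open time intervals. This is a minimal sketch; the window times are invented for illustration, and it assumes each window is given as a start and end `datetime` on the same timeline.

```python
from datetime import datetime


def windows_overlap(a_start, a_end, b_start, b_end):
    """True if [a_start, a_end) and [b_start, b_end) share any moment."""
    return a_start < b_end and b_start < a_end


backup = (datetime(2024, 1, 10, 1, 0), datetime(2024, 1, 10, 3, 30))
updates = (datetime(2024, 1, 10, 3, 0), datetime(2024, 1, 10, 4, 0))
moved_updates = (datetime(2024, 1, 10, 4, 0), datetime(2024, 1, 10, 5, 0))

print(windows_overlap(*backup, *updates))        # True: updates start mid-backup
print(windows_overlap(*backup, *moved_updates))  # False: safe to schedule
```

Backup jobs often run longer than planned, so base the backup window on the longest duration in your job history, not on the scheduled one.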

Final Words

Maintenance activities consume a substantial portion of the typical administrator’s workload, so these procedures serve as a best practice for all systems, not just those related to backup. However, since your disaster recovery plan hinges on the health of your backup system, you cannot allow it to fall into disrepair.

FAQ

What is a data backup system?

A data backup system is a method or process designed to create and maintain duplicate copies of digital information to ensure its availability in the event of data loss, corruption, or system failures.

What is an example of a data backup?

An example of a data backup is storing copies of files, documents, or entire systems on external hard drives, cloud services, or other storage media. This safeguards against potential data loss and facilitates recovery if the original data is compromised.

How do companies back up their data?

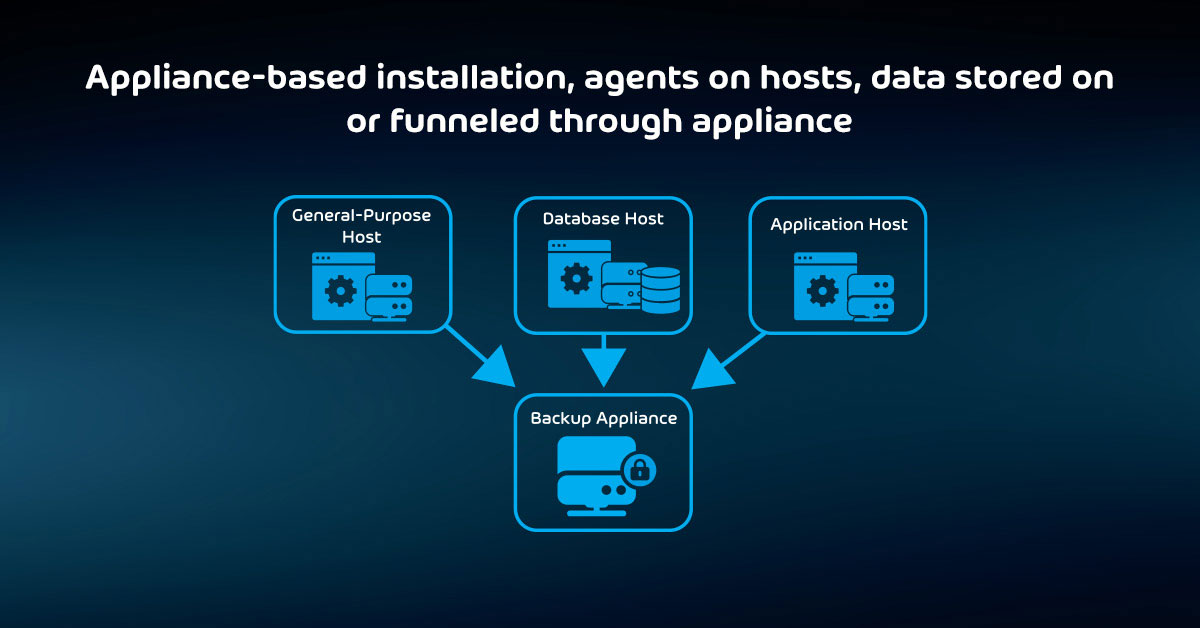

Companies use a variety of methods to back up their data, including regular backups to external servers, cloud-based solutions, tape drives, or redundant storage systems. Automated backup software is often employed to streamline and schedule the backup process, ensuring data integrity and accessibility.